Purva Paroch

The term deepfake refers to manipulated videos, or other digital representations, produced by sophisticated artificial intelligence. The result is fabricated images and sounds that appear to be real.

Faking content is nothing new. Deepfakes, however, leverage powerful techniques from machine learning and artificial intelligence to manipulate or generate visual and audio content that is highly likely to deceive. The main machine learning methods used to create deepfakes are based on deep learning and involve training generative neural network architectures, such as autoencoders or generative adversarial networks (GANs).

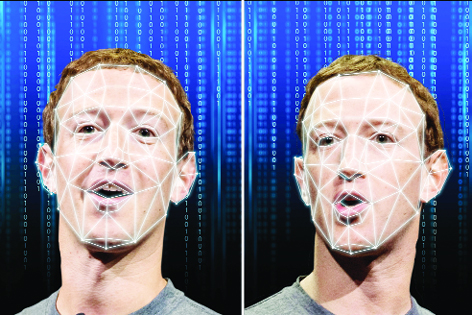

Deepfake, a portmanteau of ‘deep learning’ and ‘fake’, is an application of artificial intelligence. It is what we might call the 21st century’s answer to Photoshop. With nothing more than a computer and an Internet connection, we can put words in a politician’s mouth or star in a movie. The use of this technology is, however, of deep concern because people start believing in such fake events. Such content often cannot be distinguished from legitimate content with the naked eye; it requires in-depth analysis.

Recently, two artists working with an ad agency created a deepfake of Facebook’s founder Mark Zuckerberg. The video showed Mr. Zuckerberg saying things that he never actually said. It was uploaded to Instagram and shared across many platforms.

The techniques behind deepfakes have been developed by academic researchers since the 1990s, and more recently by amateurs in online communities. The name itself was born in 2017, when a Reddit user called ‘deepfakes’ posted doctored video clips on the website.

Some deepfakes rely on a performer who can impersonate the source’s voice and gestures. This approach was seen in a video produced by BuzzFeed featuring comedian Jordan Peele, made using After Effects CC and FakeApp. The creators pasted Peele’s mouth over Barack Obama’s and replaced Obama’s jawline with one that followed Peele’s mouth movements. They then used FakeApp to refine the footage through more than 50 hours of automatic processing.

Deepfakes have garnered widespread attention for their uses in fake news, hoaxes, and financial fraud. This has elicited responses from both industry and government to detect and limit their usage.

To an ordinary viewer, a deepfake video appears to show a person doing or saying something. To create such a video, images of the target’s face, taken from many different angles, are superimposed on the face of the original subject.

First, thousands of face shots of two people are run through an AI algorithm called an encoder. The encoder finds and learns the similarities between the two faces and reduces them to their shared common features, compressing the images in the process. Next, a second AI algorithm, called a decoder, is taught to recover the faces from the compressed images. Because the faces are different, one decoder is trained to recover the first person’s face and another is trained to recover the second person’s face. To swap the faces, the encoded images are fed into the “wrong” decoder. For instance, if the two people are A and B, a compressed image of A’s face is fed into the decoder trained on B’s face. The decoder then reconstructs the face of B, but with the expressions and orientation of A’s face. To make a convincing video, this process has to be repeated on every frame. The tools that generate such content are based on AI and machine learning, and the resulting videos can be detected using deepfake detection services that are now being developed.
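The encoder and decoder steps described above can be sketched in a few lines of Python. This is only a toy illustration of the data flow, not a real deepfake model: a "face" is assumed to be four numbers rather than an image, and the names `encode`, `train_decoder` and `swap_frames` are hypothetical stand-ins for trained neural networks.

```python
from statistics import mean

# Toy "face": (jaw_width, nose_length, smile, head_turn).
# Assumption for illustration: the first two values are specific to a
# person's identity, the last two are expression and orientation.


def encode(face):
    """Shared encoder: keep only the features common to both people."""
    _jaw, _nose, smile, head_turn = face
    return (smile, head_turn)


def train_decoder(faces):
    """'Train' a decoder for one person by averaging their identity features."""
    jaw = mean(f[0] for f in faces)
    nose = mean(f[1] for f in faces)

    def decode(latent):
        # Rebuild a full face: this person's identity plus the encoded
        # expression and orientation.
        smile, head_turn = latent
        return (jaw, nose, smile, head_turn)

    return decode


def swap_frames(frames, decoder):
    """Repeat encode-then-decode on every frame of a video."""
    return [decoder(encode(frame)) for frame in frames]


# Made-up training data for persons A and B.
faces_a = [(0.8, 0.3, 0.1, -0.2), (0.8, 0.3, 0.9, 0.4)]
faces_b = [(0.5, 0.6, 0.0, 0.0), (0.5, 0.6, 0.5, 0.1)]

decode_a = train_decoder(faces_a)
decode_b = train_decoder(faces_b)

# Feed A's frames into the "wrong" decoder: B's identity, A's expressions.
swapped = swap_frames(faces_a, decode_b)
```

Each tuple in `swapped` carries B's jaw and nose values together with A's smile and head turn, which is the swap the paragraph describes. Real systems learn both the encoder and the decoders from thousands of images instead of using hand-picked features.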

Deepfake Detection

Not all deepfakes are created with the utmost precision, since the most sophisticated algorithms require a lot of processing power. The quality of a deepfake depends on the resources available to its creator, which makes it somewhat possible to tell whether a video or image is fake. Deepfakes can be really convincing, but in most cases there are flaws that help us spot discrepancies in the videos.

Variability in the subject’s hair

One area that is often a giveaway is the subject’s hair. Fake people don’t get frizz or flyaways, because deepfake algorithms usually have trouble generating realistic hair and individual strands are not visible.

Discrepancy in the subject’s eyes

Blinking and a shifting gaze are minute movements typical of a real person. One of the biggest challenges for deepfake creators is generating realistic eyes. A person in the middle of a conversation follows the person they are talking to with their eyes. In a deepfake, it is possible to spot a lazy eye, an odd gaze, or even a subject who never blinks.
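The "unblinking person" cue can be turned into a rough automated check. The sketch below is an illustration under stated assumptions, not a method from any particular detection service: it assumes some other tool has already produced a per-frame eye-openness score between 0 (closed) and 1 (wide open), and the function names and the `closed_below` threshold are hypothetical.

```python
def count_blinks(openness, closed_below=0.2):
    """Count open-to-closed transitions in a per-frame eye-openness signal."""
    blinks = 0
    was_closed = False
    for value in openness:
        is_closed = value < closed_below  # threshold is an assumed value
        if is_closed and not was_closed:
            blinks += 1  # a new blink starts on this frame
        was_closed = is_closed
    return blinks


def blinks_per_minute(openness, fps):
    """Convert the blink count into a rate, given the video's frame rate."""
    seconds = len(openness) / fps
    return count_blinks(openness) * 60 / seconds
```

A clip whose blink rate is far below what the same person shows in genuine footage would be a candidate for closer inspection, not proof of manipulation.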

Unreliability in teeth

Much like hair, individual teeth are tough to generate. Artificially synthesized teeth tend to look like monolithic white slabs because the algorithms often fail to learn such fine detail.

Disparity in the facial profile

In a fake video, the subject often looks odd when they turn away from the camera. In a video manipulated by face swapping, the subject may appear to be facing the wrong direction, or their facial proportions may become skewed when they look away.

Inspecting on a bigger screen

The inconsistencies of a fabricated video are less likely to be discovered on a small smartphone screen. Watching the video in full-screen mode on a desktop monitor or laptop makes it easier to discern the visual flaws left by editing, and can reveal contextual inconsistencies between the supposed subject of the video and the information they are providing. For example, in a clip of someone claiming to be in the UK, one might spot a car in the background driving on the wrong side of the road. One can also use video editing software such as Final Cut to slow the playback rate and zoom in to examine the subject’s face.

Examining the emotionality of the video

Extraordinary headlines tend to appear around major events such as elections or disasters, often accompanied by a video that has been deepfaked to tug at people’s heartstrings or fuel outrage. We are neurologically wired to pay more attention to what is sensational, surprising, and exciting. This leads people to share a message without first checking its authenticity, and it is one of the many psychological factors that make fake news effective. If a video elicits a strong emotional reaction, that could be a sign that it should be fact-checked before being reposted.

Deepfakes will equalize access to creativity tools in entertainment, enable new forms of content, and make it possible for virtual beings to exist. There are reasons to be excited, as well as reasons to be concerned, about the applications of this technology. The problem is that deepfakes don’t have to flood social media platforms in order to spread disinformation.

There is a perpetual competition between the creators of deepfakes and those who detect them. Deepfakes continue to find uses in entertainment, harassment, and academic study, so we are unlikely to hear the end of them anytime soon. We can only hope that detection systems are developed at the same pace at which deepfakes are being created.

(The author is a Computer Engineering student at Yogananda College of Engineering and Technology, Jammu)